When I read a book I have the habit of highlighting certain passages I find interesting or useful. After I finish the book I’ll type up those passages and put them into a note on my phone. I’ll keep them to comb through every so often so that I remember what that certain book was about. That’s what these are. So if I ever end up lending you a book, these are the sections that I’ve highlighted in that book. Enjoy!

Moore’s Law proclaims that the power of computer processors doubles roughly every two years. This means that today’s computers are five thousand times more powerful than the ones available when the book was first written.
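The arithmetic behind that figure can be checked with a rough sketch. The 25-year gap between editions is an assumption on my part (the passage does not state the dates), and `moores_law_factor` is just an illustrative helper name.

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` if power doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)


# Assumption: roughly 25 years passed between the first and the revised edition.
factor = moores_law_factor(25)
print(round(factor))  # prints 5793
```

Twelve and a half doublings give a factor of a little under six thousand, which is in line with the "five thousand times" in the quote.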

With doors that push, put a vertical plate on the side to be pushed. The vertical plate and supporting pillars are natural signals, naturally interpreted, making it easy to know just what to do: no labels needed.

The problem with the designs of most engineers is that they are too logical. We have to accept human behavior the way it is, not the way we would wish it to be.

We design things for people, so we need to understand both technology and people.

Designers need to focus their attention on the cases where things go wrong, not just on when things work as planned.

Visible affordances provide strong clues to the operations of things. Knobs afford turning, pushing, and pulling.

My favorite example of a misleading signifier is a row of vertical pipes across a service road that I once saw in a public park. The pipes obviously blocked cars and trucks from driving on that road. But I saw a park vehicle simply go through the pipes. Huh? The pipes were actually made of rubber, so vehicles could simply drive right over them.

Ever watch people at an elevator repeatedly push the Up button, or repeatedly push the pedestrian button at a street crossing? Ever drive to a traffic intersection and wait an inordinate amount of time for the signals to change, wondering all the time whether the detection circuits noticed your vehicle? What is missing in all these cases is feedback: some way of letting you know that the system is working on your request.

Feedback must be immediate: even a delay of a tenth of a second can be disconcerting.

Poor feedback can be worse than no feedback at all, because it is distracting, uninformative, and in many cases irritating and anxiety-provoking.

In the store, the purchaser focuses on price and appearance, and perhaps on prestige value. At home, the same person will pay more attention to functionality and usability.

People don’t want to buy a quarter-inch drill. They want a quarter-inch hole. But why would anyone want a quarter-inch hole? Clearly that is an intermediate goal. Perhaps they wanted to hang shelves on the wall. Once you realize that they don’t really want the drill, you realize that perhaps they don’t really want the hole either. They want to install their bookshelves.

Conscious attention is necessary to learn most things, but after the initial learning, continued practice and study, sometimes for thousands of hours over a period of years, produces what psychologists call “overlearning”. Once skills have been overlearned, performance appears to be effortless, done automatically, with little or no awareness.

In the house you lived in three houses ago, as you entered the front door, was the doorknob on the left or right?

The visceral system allows us to respond quickly and subconsciously, without conscious awareness or control.

Visceral and behavioral levels are subconscious and the home of basic emotions. The reflective level is where conscious thought and decision-making reside.

For designers, the visceral response is about immediate perception. This is where the style matters: appearances, whether sound or sight, touch or smell, drive the visceral response. This has nothing to do with how usable, effective, or understandable the product is. It is all about attraction or repulsion.

Engineers and other logical people tend to dismiss the visceral response as irrelevant, but all of us make these kinds of judgements, even those very logical engineers.

Because everyone perceives the fault to be his or her own, nobody wants to admit to having trouble. This creates a conspiracy of silence, where the feelings of guilt and helplessness among people are kept hidden.

Do not blame people when they fail to use your products properly. Take people’s difficulties as signifiers of where the product can be improved.

Try eliminating all error messages from electronic or computer systems. Instead, provide help and guidance. Make it possible to correct problems directly from help and guidance messages. Allow people to continue with their task: Don’t impede progress–help make it smooth and continuous. Never make people start over. Assume that what people have done is partially correct.

Nobody likes to be observed performing badly.

Example: How come nobody ever said anything about the issue? Well, when the system stopped working or did something strange, they dutifully reported it as a problem. But when they made the Return versus Enter error, they blamed themselves.

Don’t criticize unless you can do better.

To use our knowledge in the head, we have to be able to store and retrieve it, which might require considerable amounts of learning. Knowledge in the world requires no learning, but can be more difficult to use.

When searching for the reason, even after you have found one, do not stop. Ask why that was the case. And then ask why again. Keep asking until you have uncovered the true underlying causes.

The tendency to stop seeking reasons as soon as a human error has been found is widespread.

When someone says “It was my fault, I knew better,” this is not a valid analysis of the problem. That doesn’t help prevent its recurrence.

If the system lets you make the error, it is badly designed. And if the system induces you to make the error, then it is really badly designed.

In many industries, the rules are written more with a goal toward legal compliance than with an understanding of the work requirements. A major cause of violations is inappropriate rules or procedures that not only invite violation but encourage it.

A slip occurs when a person intends to do one action and ends up doing something else. With a slip, the action performed is not the same as the action that was intended.

A mistake occurs when the wrong goal is established or the wrong plan is formed. From that point on, even if the actions are executed properly they are part of the error, because the actions themselves are inappropriate–they are part of the wrong plan.

Slips occur when the goal is correct, but the required actions are not done properly. Mistakes occur when the goal or plan is wrong.

Slips often result from a lack of attention to the task. Skilled people – experts – tend to perform tasks automatically, under subconscious control. Novices have to pay considerable conscious attention, resulting in a relatively low occurrence of slips.

Most objects don’t need precise descriptions, simply enough precision to distinguish the desired target from alternatives. This means that a description that usually suffices may fail when the situation changes so that multiple similar items now match the description.

Using a bank or credit card to withdraw money from an automatic teller machine, then walking off without the card, is such a frequent error that many machines now have a forcing function: the card must be removed before the money will be delivered.
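A forcing function like this can be sketched as a tiny state machine. This is only an illustration of the idea, not how any real ATM is built: the `Atm` class and its method names are hypothetical.

```python
class Atm:
    """Toy model of a forcing function: cash is released only after the card is taken."""

    def __init__(self) -> None:
        self.card_inserted = False
        self.pending_cash = 0

    def insert_card(self) -> None:
        self.card_inserted = True

    def request_cash(self, amount: int) -> None:
        if not self.card_inserted:
            raise RuntimeError("Insert your card first.")
        self.pending_cash = amount

    def remove_card(self) -> None:
        self.card_inserted = False

    def dispense(self) -> int:
        # The forcing function: refuse to hand over money while the card is still inside.
        if self.card_inserted:
            raise RuntimeError("Please take your card before the cash is delivered.")
        cash, self.pending_cash = self.pending_cash, 0
        return cash
```

Because `dispense` refuses to run while the card is still in the machine, the only path to the money goes through `remove_card`, so walking away without the card is no longer possible.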

With pens, the solution is simply to prevent their removal, perhaps by chaining public pens to the counter.

Mode errors are especially likely where the equipment does not make the mode visible, so the user is expected to remember what mode has been established, sometimes hours earlier, during which time many intervening events might have occurred.

Human teams and automated systems have to be thought of as collaborative, cooperative systems. Instead, they are often built by assigning the tasks that machines can do to the machines and leaving the humans to do the rest. This usually means that machines do the parts that are easy for people, but when the problems become complex, which is precisely when people could use assistance, that is when the machines usually fail.

Designing an effective checklist is difficult. You must continually adjust the list until it covers the essential items yet is not burdensome to perform. Many people who object to checklists are actually objecting to badly designed lists.

In an assembly line at Toyota, if a worker notices something wrong, a special cord is pulled that stops the assembly line and alerts the expert crew. Experts converge upon the problem area to determine the cause. “Why did it happen?” “Why was that?” “Why is that the reason?” The philosophy is to ask “Why?” as many times as may be necessary to get to the root cause of the problem and fix it so it can never occur again.

I went around with a pile of small, circular, green stick-on dots and put them on each door beside its keyhole, with the green dot indicating the direction in which the key needed to be turned.

Once people find an explanation for an apparent anomaly, they tend to believe they can now discount it. Distinguishing a true anomaly from an apparent one is difficult.

The psychologist Baruch Fischhoff has studied explanations given in hindsight, where events seem completely obvious and predictable after the fact but completely unpredictable beforehand. Fischhoff presented people with a number of situations and asked them to predict what would happen. When the outcome was not known by the people being studied, few predicted the actual outcome. When the outcome was known, it appeared to be plausible and likely and other outcomes appeared unlikely. Hindsight makes events seem obvious and predictable.

The next time a major accident occurs, ignore the initial reports from journalists, politicians, and executives who don’t have any substantive information but feel compelled to provide statements anyway. Wait until the official reports come from trusted sources. This could be months or years after the accident, and the public usually wants answers immediately, even if those answers are wrong. When the full story finally appears, newspapers will no longer consider it news, so they won’t report it.

During the critical phases of flying–landing and takeoff–the FAA requires what it calls a “Sterile Cockpit Configuration” whereby pilots are not allowed to discuss any topic not directly related to the control of the airplane during these critical periods.

Electronic systems have a wide range of methods that could be used to reduce error. One is to segregate controls, so that easily confused controls are located far from one another. Another is to use separate modules, so that any control not directly relevant to the current operation is not visible on the screen, but requires extra effort to get to.

Person: Delete “my most important file.”

System: Do you want to delete “my most important file”?

Person: Yes.

System: Are you certain?

Person: Yes!

System: “My most important file” has been deleted.

Person: Oh. Damn.

The above request for confirmation seems like an irritant rather than an essential safety check because the person tends to focus upon the action rather than the object that is being acted upon. A better check would be a prominent display of both the action to be taken and the object, perhaps with the choice of “cancel” or “do it”.
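As a rough sketch of that better check, the prompt below puts the action and the object side by side and offers only “cancel” or “do it”. The function names and the exact layout are my own invention for illustration.

```python
def render_confirmation(action: str, target: str) -> str:
    """Build a prompt that shows the action and the object with equal prominence."""
    return (
        f"Action: {action.upper()}\n"
        f'Object: "{target}"\n'
        "[cancel]  [do it]"
    )


def is_confirmed(answer: str) -> bool:
    """Only the explicit phrase 'do it' proceeds; anything else counts as cancel."""
    return answer.strip().lower() == "do it"


print(render_confirmation("delete", "my most important file"))
```

A reflexive “yes” does nothing here: the person has to read the two choices, and the name of the file sits right above them.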

Suppose I intended to type the word We, but instead of typing Shift + W for the first character, I typed Command + W, the keyboard command for closing a window. Because I expected the screen to display an uppercase W, when a dialog box appeared asking whether I really wanted to delete the file, I would be surprised, which would immediately alert me to the slip.

Reason’s Swiss Cheese Model of Accidents

If every slice of cheese represents a condition in the task being done, an accident can happen only if holes in all four slices of cheese are lined up just right. Any leakage–passageway through a hole–is most likely blocked at the next level. The metaphor illustrates the futility of trying to find the one underlying cause of an accident (usually some person) and punishing the culprit. Instead, we need to think about systems, and devise ways to make the systems, as a whole, more reliable. This is why an attempt to find “the” cause of an accident is usually doomed to fail. The Swiss cheese metaphor suggests several ways to reduce accidents:

- Add more slices of cheese.

- Reduce the number of holes (or make the existing holes smaller).

- Alert the human operators when several holes have lined up.
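The first two suggestions can be made concrete with a toy calculation. It rests on a big simplifying assumption that is mine, not the book's: each slice fails independently, so the chance that every hole lines up is the product of the per-slice chances. The numbers are made up.

```python
from math import prod


def accident_probability(hole_probabilities: list[float]) -> float:
    """Chance that a problem passes through every slice, assuming independent slices."""
    return prod(hole_probabilities)


four_slices = accident_probability([0.1] * 4)     # baseline: four layers of defense
five_slices = accident_probability([0.1] * 5)     # add one more slice of cheese
smaller_holes = accident_probability([0.05] * 4)  # same slices, smaller holes

print(four_slices, five_slices, smaller_holes)
```

Under these assumptions, both adding a slice and shrinking the holes lower the overall chance of an accident, even though no single slice is perfect.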

Most professions that involve the risk of death or injury have similar regulations about drinking, sleep, and drugs. But everyday jobs do not have these restrictions.

We should deal with error by embracing it, by seeking to understand the causes and ensuring they do not happen again. We need to assist rather than punish or scold.

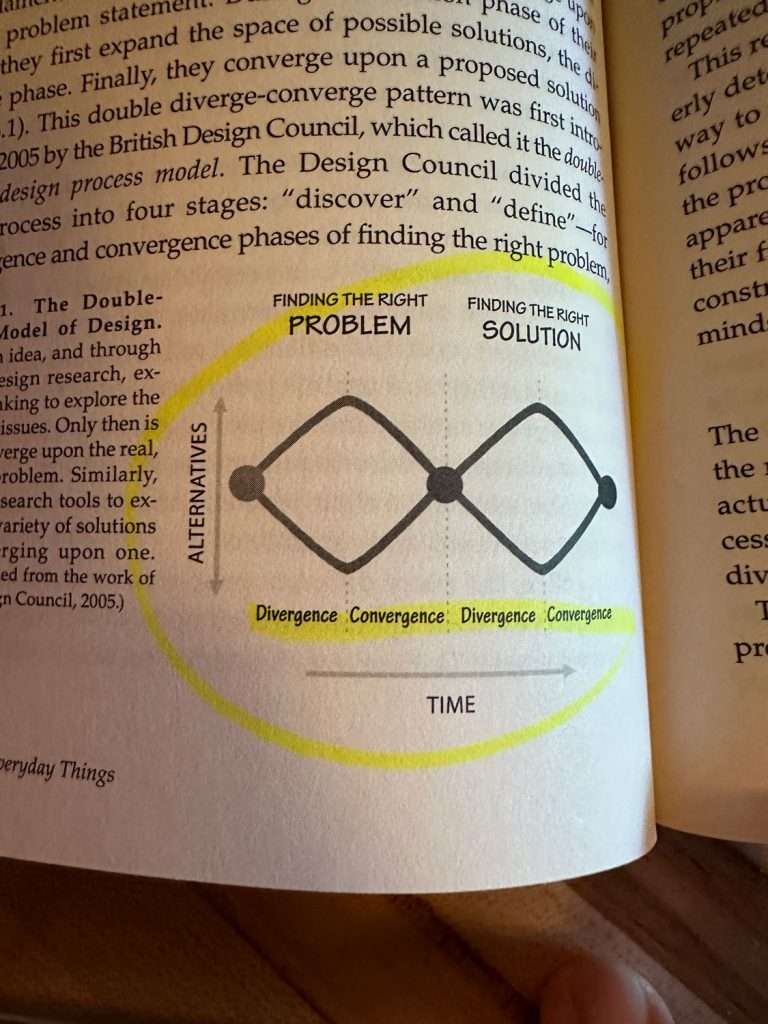

A brilliant solution to the wrong problem can be worse than no solution at all: solve the correct problem. Good designers never start by trying to solve the problem given to them: they start by trying to understand what the real issues are.

Generate numerous ideas. It is dangerous to become fixated upon one or two ideas too early in the process.

When people are asked what they need, they primarily think of the everyday problems they face, seldom noticing larger failures, larger needs. They don’t question the major methods they use.

@joekotlan on X